The tag naming scheme for RAPIDS images incorporates key platform details into the tag, for example: 22.02-cuda11.0-runtime-ubuntu18.04. To get the latest RAPIDS version of a specific platform combination, simply exclude the RAPIDS version from the tag (here, cuda11.0-runtime-ubuntu18.04).
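The tag layout can be made concrete with a small parser. This is only a minimal sketch, assuming tags have exactly the four dash-separated fields seen in the example (RAPIDS version, CUDA version, image type, OS). The names `RapidsTag`, `parse_rapids_tag` and `latest_tag` are hypothetical helpers for illustration, not part of any RAPIDS tooling.

```python
from typing import NamedTuple, Optional


class RapidsTag(NamedTuple):
    """Fields of a RAPIDS image tag (hypothetical helper type)."""
    rapids_version: Optional[str]  # None when the tag omits the version
    cuda_version: str
    image_type: str
    os_name: str


def parse_rapids_tag(tag: str) -> RapidsTag:
    """Split a tag such as '22.02-cuda11.0-runtime-ubuntu18.04' into fields.

    Assumes the four-field layout from the example; other layouts raise.
    """
    parts = tag.split("-")
    # A version-less tag starts directly with the CUDA field.
    if parts[0].startswith("cuda"):
        parts = [None] + parts
    if len(parts) != 4 or not parts[1].startswith("cuda"):
        raise ValueError(f"unexpected tag layout: {tag!r}")
    rapids_version, cuda, image_type, os_name = parts
    return RapidsTag(rapids_version, cuda[len("cuda"):], image_type, os_name)


def latest_tag(tag: str) -> str:
    """Drop the RAPIDS version so the tag refers to the latest release."""
    t = parse_rapids_tag(tag)
    return f"cuda{t.cuda_version}-{t.image_type}-{t.os_name}"
```

For instance, `latest_tag("22.02-cuda11.0-runtime-ubuntu18.04")` drops the leading `22.02` field and keeps the platform part of the tag unchanged.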

#Nvidia docker ubuntu 18.04 cuda container full#

#Nvidia docker ubuntu 18.04 cuda container install#

The installation fails at the last step with: Errors were encountered while processing: tmp/apt-dpkg-install-dozryj/082-nvidia-utils-440_440.33.01-0ubuntu1_b E: Sub-process /usr/bin/dpkg returned an error code (1). Attempting to apt --fix-broken install also returns an error with Preparing to unpack.

#Nvidia docker ubuntu 18.04 cuda container drivers#

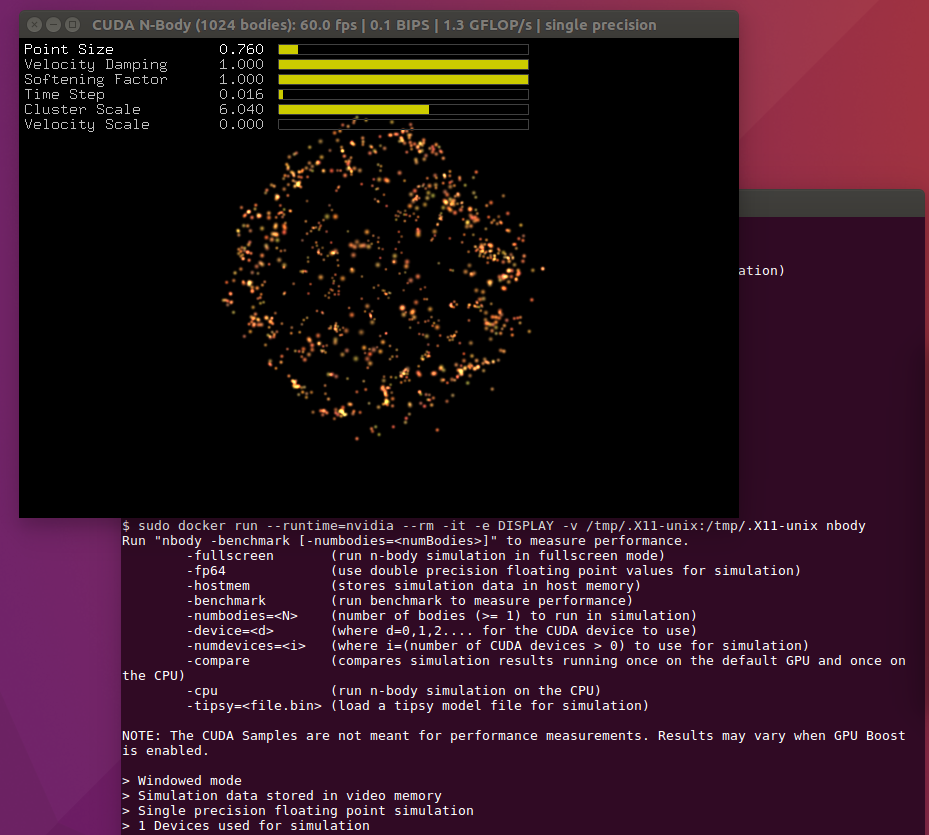

However, I’m now trying to use the CUDA drivers inside a container with a different base image, which needs CUDA 10.2 to compile stuff in it. The base image is already quite heavy on its own, so a multi-stage build on top of an Nvidia base seems unfeasible. The container is run with the --gpus all flag and nvidia-smi returns the expected values from inside it. To that aim I’m installing the drivers by following what’s presented at Installation Guide Linux :: CUDA Toolkit Documentation, selecting the CUDA Toolkit 10.2 deb (local) installer instead of the 11.4 one.
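One way to check which toolkit release a build inside the container would actually see is to read the release line printed by `nvcc --version`. The following is a minimal sketch, assuming `nvcc` is on the PATH inside the container and that its output contains a line of the form `Cuda compilation tools, release 10.2, V10.2.89`. The helper names `parse_nvcc_release` and `installed_cuda_release` are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_nvcc_release(output: str) -> Optional[str]:
    """Extract the 'major.minor' release from `nvcc --version` output."""
    match = re.search(r"release (\d+\.\d+)", output)
    return match.group(1) if match else None


def installed_cuda_release() -> Optional[str]:
    """Return the toolkit release reported by nvcc, or None if nvcc is absent."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    result = subprocess.run(
        [nvcc, "--version"], capture_output=True, text=True, check=False
    )
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    release = installed_cuda_release()
    print("CUDA toolkit release:", release or "nvcc not found")
```

Note that the CUDA version shown by nvidia-smi is the highest version the driver supports, not the toolkit installed in the container, so the two numbers can legitimately differ.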

#Nvidia docker ubuntu 18.04 cuda container driver#

As per the title, I’m trying to install CUDA libraries (10.2) in a Docker-CE container with Ubuntu 18.04 running on WSL2, with no luck. I followed the steps at CUDA on WSL to install the Nvidia drivers, which are up and running, as are nvidia-container-toolkit and docker-ce (20.10.8), since the test containers presented there gave no issue. The CUDA version installed on WSL is 11.4, with driver version 471.21.
